Turning imagination into reality through Visual Special Effects

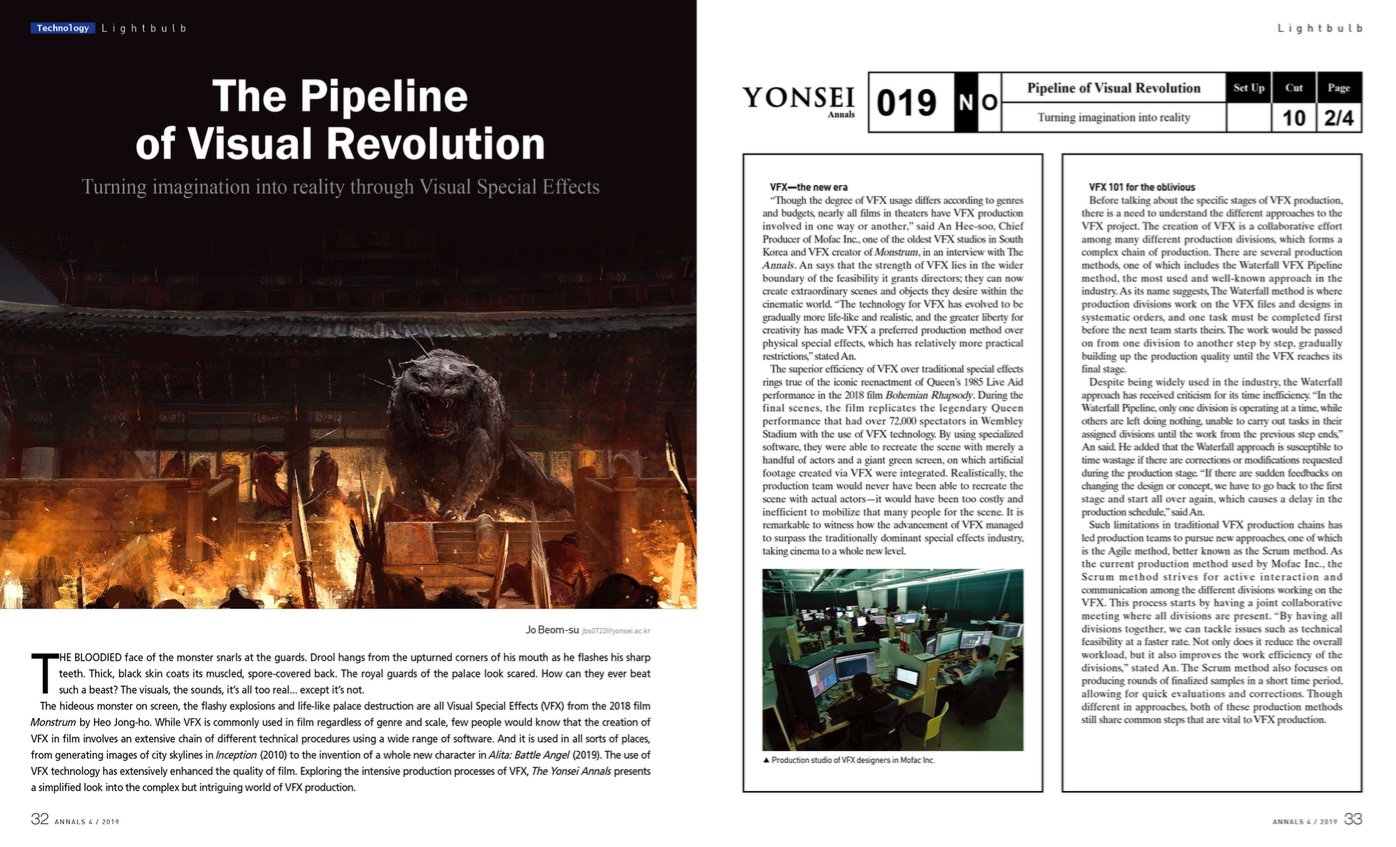

THE BLOODIED face of the monster snarls at the guards. Drool hangs from the upturned corners of its mouth as it flashes its sharp teeth. Thick, black skin coats its muscled, spore-covered back. The royal guards of the palace look terrified. How can they ever beat such a beast? The visuals, the sounds, it’s all too real... except it’s not.

The hideous monster on screen, the flashy explosions and the lifelike destruction of the palace are all Visual Special Effects (VFX) from the 2018 film Monstrum, directed by Heo Jong-ho. VFX is common in films of every genre and scale, yet few people realize that creating it involves an extensive chain of technical procedures using a wide range of software. VFX is used for everything from generating city skylines in Inception (2010) to creating an entirely new character in Alita: Battle Angel (2019), and it has greatly enhanced the quality of film. Exploring the intensive production process behind VFX, The Yonsei Annals presents a simplified look into its complex but intriguing world.

VFX—the new era

“Though the degree of VFX usage differs according to genre and budget, nearly all films in theaters involve VFX production in one way or another,” said An Hee-soo, Chief Producer of Mofac Inc., one of the oldest VFX studios in South Korea and the studio behind the VFX of Monstrum, in an interview with The Annals. According to An, the strength of VFX lies in how far it widens what directors can feasibly put on screen; they can now create the extraordinary scenes and objects they envision within the cinematic world. “The technology for VFX has evolved to be gradually more lifelike and realistic, and the greater creative liberty has made VFX a preferred production method over physical special effects, which have relatively more practical restrictions,” stated An.

The superior efficiency of VFX over traditional special effects is evident in the iconic reenactment of Queen’s 1985 Live Aid performance in the 2018 film Bohemian Rhapsody. In its final scenes, the film uses VFX to recreate the legendary performance, which Queen played before more than 72,000 spectators at Wembley Stadium. Using specialized software, the production team recreated the scene with merely a handful of actors and a giant green screen, onto which artificial footage created with VFX was integrated. Realistically, the team could never have staged the scene with a real crowd; mobilizing that many people would have been far too costly and inefficient. It is remarkable how advances in VFX have allowed it to overtake the traditionally dominant special effects industry, taking cinema to a whole new level.

VFX 101 for the uninitiated

Before discussing the specific stages of VFX production, it helps to understand the different ways a VFX project can be organized. Creating VFX is a collaborative effort among many production divisions, forming a complex chain of production. Of the several production methods, the Waterfall VFX Pipeline is the most widely used and best-known approach in the industry. As its name suggests, in the Waterfall method the production divisions work on the VFX files and designs in a fixed sequence, and each task must be completed before the next team starts its own. The work is passed from one division to the next step by step, gradually building up the production quality until the VFX reaches its final stage.

Despite its wide use in the industry, the Waterfall approach has been criticized for wasting time. “In the Waterfall Pipeline, only one division is operating at a time, while the others are left doing nothing, unable to carry out their own tasks until the work from the previous step ends,” An said. He added that the Waterfall approach is especially prone to delays when corrections or modifications are requested during production. “If there is sudden feedback about changing the design or concept, we have to go back to the first stage and start all over again, which delays the production schedule,” said An.

Such limitations in traditional VFX production chains have led production teams to pursue new approaches, one of which is the Agile method, particularly its Scrum framework. Mofac Inc. currently uses the Scrum method, which encourages active interaction and communication among the different divisions working on the VFX. The process starts with a joint meeting attended by all divisions. “By having all divisions together, we can tackle issues such as technical feasibility faster. Not only does it reduce the overall workload, but it also improves the work efficiency of the divisions,” stated An. The Scrum method also focuses on producing successive rounds of finished samples within short time periods, allowing quick evaluation and correction. Though their approaches differ, both production methods share common steps that are vital to VFX production.

Concept Design

The production of VFX begins with Concept Design, where the images are first sketched out and visualized. The Art Division works with the film’s director and the producers involved to discuss and conceptualize the desired VFX image. To illustrate how the Concept Design stage is carried out, An referred to the company’s recent project, Monstrum. “The concept design for Monstrum took inspiration from Hae-tae, a legendary animal from Korean mythology, from which we gradually developed our own designs to create the finalized concept of the beast,” explained An. Once the final concept was agreed upon and approved, the Art Division worked on finer details, such as the number of legs and the color of the beast’s fur, to fit the film’s intended concept.

Graphics Processing

After the concept design is finalized, the VFX image moves on to the next stage, Graphics Processing. This stage involves three main tasks: Modeling, Surfacing and Look-Development. Modeling uses software to produce a three-dimensional (3D) computer graphics representation of the image. The 3D model is then given a movement system through Rigging, which grants it the realistic mobility needed for the dynamic actions shown on screen; this mobility is tested in later stages of production. “Rigging is necessary to give the 3D model a skeleton, muscles and joints that ultimately determine how the beast walks and runs in the actual animation in later stages of VFX production,” said An.

While the team completes Rigging, another vital process called Surfacing takes place simultaneously. Surfacing creates the external textures of the 3D model, from skin and wrinkles down to individual hair strands, so that the model appears as realistic as possible. For Monstrum, the concept of a carnivorous, plague-spreading beast required Surfacing to produce visuals matching the design finalized in the Concept Design stage. The Surfacing process therefore paid close attention to tiny details, adding textures such as spores and bloodstains.

The assigned VFX designer then carries out additional calibration during Look-Development. With the 3D model placed in the various environments of the film, its lighting reflections and tone are digitally adjusted so that it looks realistic against each background shown in the film.

Animation

Animation is the next crucial stage of VFX production, in which the different movements of the 3D model are tested. Using the skeleton, muscles and joints installed during Rigging, the team checks whether the 3D model can perform the actions and movements the film requires. “The beast was tested for movements such as walking, running, biting and many other actions that appeared in the film; this stage verifies whether the current design fulfills the film’s feasibility requirements,” commented An.

Simulation (FX)

Simulation, also known as FX, is the process that adds specific elements to the film, such as fire, water and destruction, that cannot practically be created and controlled in real life. In Monstrum, because destroying the palace and staging the resulting explosions were not feasible during live shooting, FX created these effects by simulating them with computer-generated graphics.

Lighting and Rendering

Final effects, such as shadows, are then added to the generated VFX so that they match the light sources in the actual footage. During the chaotic scenes in Monstrum, the beast was given shadows that moved with the light cast by the flames. This ensures that the generated 3D model and FX are seamlessly synchronized with the live-action shots filmed by the director. The team then sends the files for Rendering, where software computes the final images of the completed VFX frame by frame, producing the video footage used in the final stage of production.

Composite

The final stage is Composite, which combines the completed VFX with the footage shot by the director into a single file ready to be inserted into the film. Composite is also responsible for the final arrangement and technical adjustments of the overall footage. With these final touch-ups, the VFX blends naturally into the filmed footage, minimizing any sense that the effects are out of place.

VFX technology for the future

Though VFX production methods are well established, the industry still strives to innovate, overcoming its technological restrictions and developing new methods to make VFX technology more accessible in filmmaking. Virtual Production is one such development. By integrating Virtual Reality and Augmented Reality technology into film production, it lets directors see the intended VFX on a monitor in real time as they film. Virtual Production thus allows directors and production staff to anticipate how scenes will look after the Composite stage.

Before Virtual Production was developed, directors and actors could only imagine the VFX backgrounds, images and characters as they filmed on sets made up primarily of green screen material. Being unable to see the VFX made filming difficult, as interaction cues, positioning and realism could later be judged insufficient in post-production. “Virtual Production makes film production easier for everyone: it lets directors know what they are filming, while actors can immediately monitor their characters’ portrayals on the screen,” commented An. In the long term, this development has made overall film production more efficient because it minimizes the risk of last-minute editing or reshooting when directors are not satisfied with the final footage.

* * *

Though VFX has become something we take for granted, a look at the time-consuming stages and complex technology behind it offers a new perspective. Who would have guessed that a few entertaining minutes of a terrifying beast’s animation were, in fact, the meticulous result of multiple processes? While we often associate technology with the more practical and economic aspects of society, VFX shows us that technological advancement has also significantly improved the quality of the entertainment we consume. VFX has managed to turn our imaginations into reality, making it undoubtedly worthy of the title “visual revolution.”

Jo Beom-su

jbs0722@yonsei.ac.kr